Supporting a customer-focused content site for all of NSW government.

In 2022, the NSW Department of Customer Service embarked on the mammoth task of merging content across many department and agency websites under one umbrella: NSW.gov.au. As they rolled out a new 20-topic taxonomy for this content, I planned, conducted and analysed quarterly user testing studies to validate wayfinding, information architecture and design decisions with users.

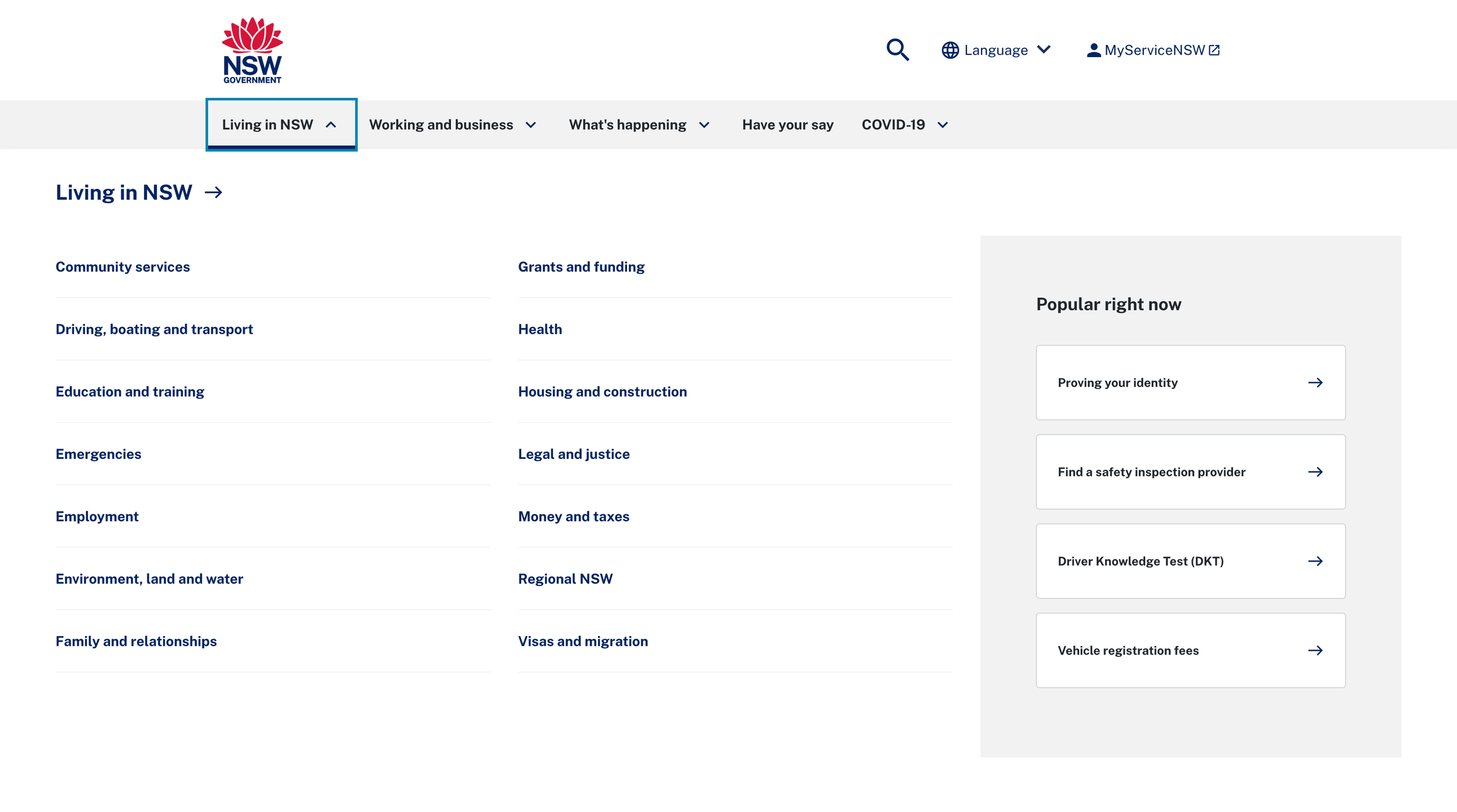

Mega menu as an improved solution to navigating a dense information site.

The research aimed to uncover usability issues, gain customer and stakeholder trust, and identify opportunities to drive value.

As a wide variety of government agencies brought their content on board, the NSW Digital Channels team needed to assure their agency partners that users could find and engage with their content.

Similarly, if visitors to the site could reliably locate the information they were looking for, this would engender trust in the NSW Government.

User testing was an opportunity to identify recurring issues (and take action on them), but it also allowed the CX team to add value to the experience of users.

A mixed methods approach for usability and content

For this project, I decided on two different methods to fit the budget and objectives:

Usability interviews facilitated remotely using Lookback

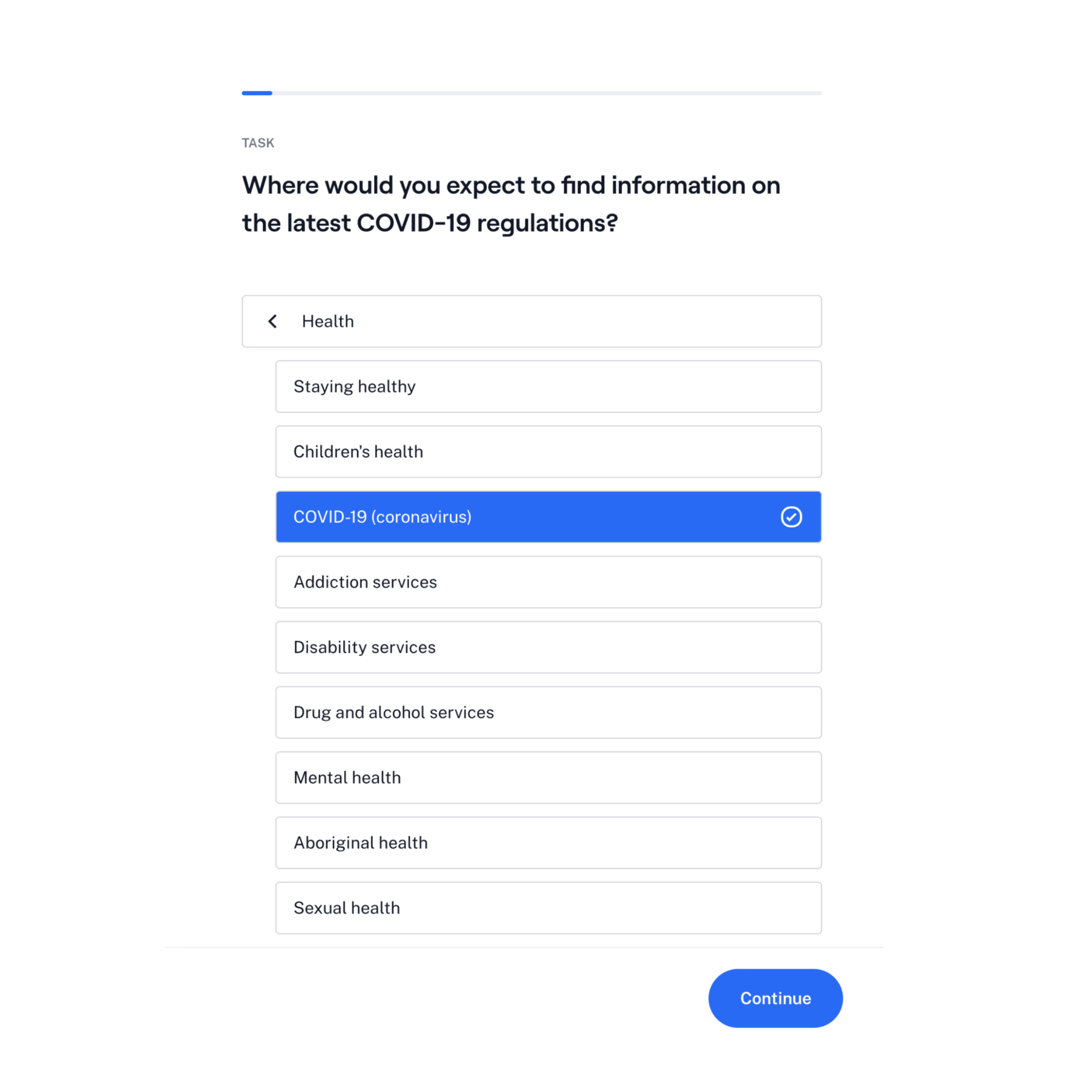

Treejack testing using Maze

The content team initially asked for 10 usability interviews each quarter, focusing on navigational tasks on the live site. Their priorities were capturing verbatim quotes and hearing feedback from as many people as possible.

I noticed that this method would make it difficult to assess the topics themselves, and that for usability testing, 5 participants are generally considered enough to uncover most usability issues and reach saturation. Instead, I recommended using a mix of moderated usability testing and unmoderated Treejack tasks to validate users’ ability to find information under the 20 topics and their 88 respective subtopics.

For the same cost as recruiting 5 people for 1-hour interviews, I was able to recruit 35 for a 15-minute unmoderated task and, each successive quarter, test a different set of subtopics until all were covered. Moderating the interviews with Lookback allowed me to take my own notes and use transcripts for analysis, which freed up time to analyse the Treejack responses. It also allowed the content designers working on the project to observe sessions.

Recruitment

For each round of interviews, I selected Askable participants so that the cohort represented the diverse population of NSW: a mix of ages, genders, postcodes, employment types and home ownership statuses (where relevant to the topic pages tested). For the Treejack studies, eligible participants were selected automatically.

Interview and task structure

Usability interviews consisted of information-seeking tasks that I observed users complete, mixed with probing questions where needed. I noted assisted or independent task completion, task time and expressions of confusion or frustration.

Treejack tasks asked participants to indicate the topic under which they would expect to find the answer to a given query. Their responses were then compared to the expected response (based on content live on the website). In some rounds, self-reported difficulty ratings were also captured.

Analysis and reporting

I synthesised the interviews by reviewing the transcript generated by Lookback, supplementing it where needed and copying responses into an Excel spreadsheet.

I organised the quotes according to the task and question asked, adding columns for my summary of the issue, observations about the steps the participant took and any unanticipated feedback that was valuable. I colour-coded cells that shared recurring themes, which made it easy to ladder up findings across the sessions.

I maintained the same spreadsheet for the 4 studies so that themes across sessions could also be captured. This also allowed for comparison when the testing stimulus included iterations of a design over time.

For the Treejack results I recorded the following into the spreadsheet:

the question asked and the expected response

the most common response (and % of respondents who answered this way)

the second most common response (and % of respondents who answered this way)

average difficulty rating

I used these to identify where participants were getting confused, where subtopics were closely related or not accurately labelled for the content they contained, and any other issues.
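The Treejack tallying described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the process used on the project (the results were recorded in a spreadsheet). The function name `summarise_task`, the placeholder topic labels and the sample responses are all hypothetical, and the `success_percent` field is an extra added for the sketch.

```python
from collections import Counter
from statistics import mean


def summarise_task(question, expected, responses, difficulty_ratings=None):
    """Summarise one tree-test task as a single spreadsheet-style row.

    All names and the row layout are assumptions made for this sketch.
    """
    if not responses:
        raise ValueError("at least one response is required")

    counts = Counter(responses)
    total = len(responses)
    ranked = counts.most_common(2)  # top two topics chosen by participants

    def as_entry(rank):
        # Return the topic at this rank with its share of respondents.
        if rank >= len(ranked):
            return None
        topic, n = ranked[rank]
        return {"topic": topic, "percent": round(100 * n / total, 1)}

    return {
        "question": question,
        "expected": expected,
        "most_common": as_entry(0),
        "second_most_common": as_entry(1),
        # Share of participants who chose the expected topic (extra field).
        "success_percent": round(100 * counts[expected] / total, 1),
        "average_difficulty": (
            round(mean(difficulty_ratings), 2) if difficulty_ratings else None
        ),
    }


# Hypothetical data: 10 participants, placeholder topic labels.
row = summarise_task(
    question="Where would you look for X?",
    expected="Topic A",
    responses=["Topic A"] * 6 + ["Topic B"] * 3 + ["Topic C"],
    difficulty_ratings=[2, 3, 2, 4, 3, 2, 3, 2, 3, 2],
)
print(row)
```

A row where the most common response differs from the expected one, or where the second most common response takes a large share, points to subtopics that overlap or labels that may need rewording.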

Supporting a business case for a better way to navigate to deeper level content

The lack of visibility of content under the nav link ‘Living in NSW’ was a recurring theme across multiple studies. Because the label was so broad, participants frequently said they wanted it to be a dropdown so they could see what the heading contained. The CX team was already working on a mega menu. While this was in progress, the feedback gathered from testing helped build a business case for it, and the final round of testing, run once the menu was live on the site, validated its value.

Identifying related content to promote browsing behaviour and discovery of helpful information

Across the 20 topics and their 88 subtopics, I was able to identify where information overlapped. This was an opportunity to identify useful pages to link in ‘related information’ modules and multiple paths a user might take to the same information. This enriched the content designers’ understanding of how their users interact with and understand their content.

Outcomes and Impact

Research as part of an iterative, user-centred design approach to content

The research was part of the iteration process, as designs for the topic landing pages were live on the site. In the third study, I was able to pass on constructive feedback to the designers about the hierarchy of information and clarity of labels. By the fourth, the designers had changed the topic pages based on user feedback and I was able to validate that the information presented was clear and comprehensive.

“Caitlin is a skilful and empathetic researcher, who is able to draw useful insights from a hugely varied range of participants. Every DCU team member who did make the time to observe a moderated session was very impressed with the usability investigations that she led. Caitlin was then able to analyse the results of both the moderated and unmoderated sessions and package them up into reports that not only were able to draw consistent findings from across all participants, but also gave recommendations for specific remediation actions where appropriate.”

NSW.gov.au Digital Channels Unit